#syscall paging system

Explore tagged Tumblr posts

Text

Syscall Coaster Pager GP-101R

One of our most popular guest pager model.

This coaster guest pager has thin body and mat finishing surface which makes the pager look more premium.

Add your logo into big branding sticker space on top.

Black & white which are basic color that fits anywhere.

PRODUCT SPECIFICATION

Description

One of our most popular guest pager model.

This coaster guest pager has thin body and mat finishing surface which makes the pager look more premium.

Add your logo into big branding sticker space on top.

Black & white which are basic color that fits anywhere.

Specification

DimensionØ95 x H15.5mmWeight79gColorBlackFrequencyFSK/433.42MHzPower SourceDC 8V/3A & LIPOLYMER battery (Rechargeable)Adapter Input100–240V~, 50/60Hz, 0.6A MAXCompatibilityMulti transmitter (GP-2000T) & Repeater (SRT-8200)

Features

Easily recognize the call with buzzer, vibration & light

Big branding sticker space

Shock-absorbing silicone ring applied

Space saving stacker design

Benefits

Improve customer satisfaction

Increase work efficiency

Reduce the work fatigue

Reduce staff cost

SYSTEM MAP

After ordering, hand over the guest pager to customers.

Call the guest pager by using the transmitter.

Place the guest pager back.

If You Want More Information Click Here Or Call Us

Tel: +971 6 5728 767

Mobile no: +971 56 474 6715

#coaster pager#syscall paging system#wireless#calling buttons#pagingsystem#restaurant#dubai#solutions#abudhabi#calling system#uae

0 notes

Text

How Wireless Paging System Works in Restaurants?

A restaurant paging system is a communication tool used to enhance efficiency and customer service in a restaurant setting. This system typically involves the use of pagers or other wireless devices to notify customers when their table is ready or when their order is ready to be picked up.

What are Pagers?

Pagers are portable devices given to customers upon arrival or order placement. They are typically small, lightweight, and have a display screen. Pagers can vibrate, beep, or display a message to alert customers. There are different models of syscall pagers.

Coaster Pager

Doughnut Pager

Diamond Pager

All in 1 Type Pagers

How Wireless Paging System Will Work

When a customer will give you an order, hand over the guest pager to the customer.

When the order will be ready the staff calls the customer with pager through the transmitter

The customer comes to the counter to collect his food and hand over the pager to the staff.

0 notes

Text

This Week in Rust 454

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Increasing the glibc and Linux kernel requirements

Project/Tooling Updates

rust-analyzer changelog #140

GCC Rust Monthly Report #19 July 2022

This Month in hyper: July 2022

Bevy 0.8

This week in Fluvio #41: The programmable streaming platform

Fornjot (code-first CAD in Rust) - Weekly Release - 2022-W31

Zellij 0.31.0: Sixel support, search panes and custom status-bar keybindings

Ogma v0.5 Release

Slint UI crate weekly updates

HexoSynth 2022 - Devlog #7 - The DSP JIT Compiler

This week in Databend #53: A Modern Cloud Data Warehouse for Everyone

Observations/Thoughts

Rust linux kernel development

Proc macro support in rust-analyzer for nightly rustc versions

Manage keybindings in a Rust terminal application

Safety

Paper Review: Safe, Flexible Aliasing with Deferred Borrows

Uncovering a Blocking Syscall

nt-list: Windows Linked Lists in idiomatic Rust

[audio] Beyond the Hype: Most-loved language – does Rust justify the hype?

Rust Walkthroughs

Patterns with Rust types

Fully generic recursion in Rust

Advanced shellcode in Rust

STM32F4 Embedded Rust at the HAL: Analog Temperature Sensing using the ADC

[video] Are we web yet? Our journey to Axum

[video] Build your Rust lightsaber (my Rust toolkit recommendations)

[video] Rust Tutorial Full Course

[video] Bevy 0.7 to 0.8 migration guide

Research

RRust: A Reversible Embedded Language

Miscellaneous

Meta approves 4 programming languages for employees and devs

[DE] Meta setzt auf die Programmiersprachen C++, Python, Hack und neuerdings Rust

Crate of the Week

This week's crate is lending-iterator, a type similar to std::iter::Iterator, but with some type trickery that allows it to .windows_mut(_) safely.

Thanks to Daniel H-M for the self-nomination!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

EuroRust Call for Papers is Open

pq-sys - Setup a CI

ockam - Make ockam message send ... support - to represent STDIN in its addr argument

ockam - Display a node's default identifier in output of ockam node create | show | list commands

ockam - Refactor ockam portal ... commands into ockam tcp-... commands

Updates from the Rust Project

391 pull requests were merged in the last week

Add diagnostic when using public instead of pub

Expose size_hint() for TokenStream's iterator

suggest dereferencing index when trying to use a reference of usize as index

suggest removing a semicolon and boxing the expressions for if-else

suggest removing the tuple struct field for the unwrapped value

improve cannot move out of error message

don't ICE on invalid dyn calls

chalk: solve auto traits for closures

add Self: ~const Trait to traits with #[const_trait]

miri: add default impls for FileDescriptor methods

miri: use cargo_metadata in cargo-miri

miri: use real exec on cfg(unix) targets

codegen: use new {re,de,}allocator annotations in llvm

use FxIndexSet for region_bound_pairs

lexer improvements

optimize UnDerefer

implement network primitives with ideal Rust layout, not C system layout

fix slice::ChunksMut aliasing

optimize vec::IntoIter::next_chunk impl

cargo: support for negative --jobs parameter, counting backwards from max CPUs

rustdoc: add support for #[rustc_must_implement_one_of]

rustdoc: align invalid-html-tags lint with commonmark spec

rustfmt: nicer skip context for macro/attribute

clippy: move assertions_on_result_states to restriction

clippy: read and use deprecated configuration (as well as emitting a warning)

clippy: remove "blacklist" terminology

clippy: unwrap_used: don't recommend using expect when the expect_used lint is not allowed

rust-analyzer: find original ast node before compute ref match

rust-analyzer: find standalone proc-macro-srv on windows too

rust-analyzer: publish extension for 32-bit ARM systems

rust-analyzer: calculate completions after type anchors

rust-analyzer: do completions in path qualifier position

rust-analyzer: don't complete marker traits in expression position

rust-analyzer: fix pattern completions adding unnecessary braces

rust-analyzer: complete path of existing record expr

Rust Compiler Performance Triage

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs entered Final Comment Period this week.

Tracking Issues & PRs

[disposition: merge] relate closure_substs.parent_substs() to parent fn in NLL

[disposition: merge] Don't derive PartialEq::ne.

[disposition: merge] Guarantees of content preservation on try_reserve failure?

[disposition: merge] Partially stabilize std::backtrace from backtrace

[disposition: merge] Tracking Issue for ptr_const_cast

New and Updated RFCs

No New or Updated RFCs were created this week.

Upcoming Events

Rusty Events between 2022-08-03 - 2022-08-31 🦀

Virtual

2022-08-03 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2022-08-03 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2022-08-05 | Virtual + Portland, OR, US | RustConf

RustConf 2022

2022-08-09 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2022-08-09 | Virtual (Myrtle Point, OR, US) | #EveryoneCanContribute Cafe

Summer Chill & Learn: including OpenTelemetry getting started with Rust

2022-08-10 | Virtual (Boulder, CO, US) | Boulder Elixir and Rust

Monthly Meetup

2022-08-11 | Virtual (Nürnberg, DE) | Rust Nuremberg

Rust Nürnberg online

2022-08-12 | Virtual + Tokyo, JP | tonari

Tokyo Rust Game Hack 2022 edition: The Bombercrab Challenge

2022-08-13 | Virtual | Rust Gamedev

Rust Gamedev Monthly Meetup

2022-08-16 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2022-08-17 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2022-08-18 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Hierarchical Task Network compiler written in Rust

2022-08-18 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2022-08-24 | Virtual + Wellington, NZ | Rust Wellington

Flywheel Edition: 3 talks on Rust!

2022-08-30 | Virtual + Dallas, TX, US | Dallas Rust

Last Tuesday

Asia

2022-08-12 | Tokyo, JP + Virtual | tonari

Tokyo Rust Game Hack 2022 edition: The Bombercrab Challenge

Europe

2022-08-30 | Utrecht, NL | Rust Nederland

Run Rust Anywhere

North America

2022-08-05 | Portland, OR, US + Virtual | RustConf

RustConf 2022

2022-08-06 | Portland, OR, US | Rust Project Teams

RustConf 2022 PostConf Unconf | Blog post

2022-08-10 | Atlanta, GA, US | Rust Atlanta

Grab a beer with fellow Rustaceans

2022-08-11 | Columbus, OH, US| Columbus Rust Society

Monthly Meeting

2022-08-16 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2022-08-23 | Toronto, ON, CA | Rust Toronto

WebAssembly plugins in Rust

2022-08-25 | Ciudad de México, MX | Rust MX

Concurrencia & paralelismo con Rust

2022-08-25 | Lehi, UT, US | Utah Rust

Hello World Cargo Crates Using Github Actions with jojobyte and Food!

Oceania

2022-08-24 | Wellington, NZ + Virtual | Rust Wellington

Flywheel Edition: 3 talks on Rust!

2022-08-26 | Melbourne, VIC, AU | Rust Melbourne

August 2022 Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

❤️🦀❤️

100,000 issues filled with love, compassion and a wholesome community. Thank you, Rust community, for being one of the most, if not straight out the most, welcoming programming communities out there. Thank you, Rust teams, for the tireless hours you spend every day on every aspect of this project. Thank you to the Rust team alumni for the many hours spent growing a plant and the humility of passing it to people you trust to continue taking care of it. Thank you everyone for RFCs, giving voice to the community, being those voices AND listening to each other.

This community has been and continue to be one of the best I have ever had the pleasure of being a part of. The language itself has many things to love and appreciate about it, from the humane error messages to giving the people the power to express high performance code without sacrificing readability for the ones to come after us. But nothing, truly nothing, takes the cake as much as the community that's building it, answering questions, helping and loving each other. Every single day.

Congratulations everyone for 100,000 issues and PRs! And thank you for being you. Because Rust is Beautiful, for having you as part of it.

To the times we spent together and the many more to come!

– mathspy on the rust-lang/rust github

Thanks to Sean Chen for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

0 notes

Text

Slow database? It might not be your fault

<rant>

Okay, it usually is your fault. If you logged the SQL your ORM was generating, or saw how you are doing joins in code, or realised what that indexed UUID does to your insert rate etc you’d probably admit it was all your fault. And the fault of your tooling, of course.

In my experience, most databases are tiny. Tiny tiny. Tables with a few thousand rows. If your web app is slow, its going to all be your fault. Stop building something webscale with microservices and just get things done right there in your database instead. Etc.

But, quite often, each company has one or two databases that have at least one or two large tables. Tables with tens of millions of rows. I work on databases with billions of rows. They exist. And that’s the kind of database where your database server is underserving you. There could well be a metric ton of actual performance improvements that your database is leaving on the table. Areas where your database server hasn’t kept up with recent (as in the past 20 years) of regular improvements in how programs can work with the kernel, for example.

Over the years I’ve read some really promising papers that have speeded up databases. But as far as I can tell, nothing ever happens. What is going on?

For example, your database might be slow just because its making a lot of syscalls. Back in 2010, experiments with syscall batching improved MySQL performance by 40% (and lots of other regular software by similar or better amounts!). That was long before spectre patches made the costs of syscalls even higher.

So where are our batched syscalls? I can’t see a downside to them. Why isn’t linux offering them and glib using them, and everyone benefiting from them? It’ll probably speed up your IDE and browser too.

Of course, your database might be slow just because you are using default settings. The historic defaults for MySQL were horrid. Pretty much the first thing any innodb user had to do was go increase the size of buffers and pools and various incantations they find by googling. I haven’t investigated, but I’d guess that a lot of the performance claims I’ve heard about innodb on MySQL 8 is probably just sensible modern defaults.

I would hold tokudb up as being much better at the defaults. That took over half your RAM, and deliberately left the other half to the operating system buffer cache.

That mention of the buffer cache brings me to another area your database could improve. Historically, databases did ‘direct’ IO with the disks, bypassing the operating system. These days, that is a metric ton of complexity for very questionable benefit. Take tokudb again: that used normal buffered read writes to the file system and deliberately left the OS half the available RAM so the file system had somewhere to cache those pages. It didn’t try and reimplement and outsmart the kernel.

This paid off handsomely for tokudb because they combined it with absolutely great compression. It completely blows the two kinds of innodb compression right out of the water. Well, in my tests, tokudb completely blows innodb right out of the water, but then teams who adopted it had to live with its incomplete implementation e.g. minimal support for foreign keys. Things that have nothing to do with the storage, and only to do with how much integration boilerplate they wrote or didn’t write. (tokudb is being end-of-lifed by percona; don’t use it for a new project 😞)

However, even tokudb didn’t take the next step: they didn’t go to async IO. I’ve poked around with async IO, both for networking and the file system, and found it to be a major improvement. Think how quickly you could walk some tables by asking for pages breath-first and digging deeper as soon as the OS gets something back, rather than going through it depth-first and blocking, waiting for the next page to come back before you can proceed.

I’ve gone on enough about tokudb, which I admit I use extensively. Tokutek went the patent route (no, it didn’t pay off for them) and Google released leveldb and Facebook adapted leveldb to become the MySQL MyRocks engine. That’s all history now.

In the actual storage engines themselves there have been lots of advances. Fractal Trees came along, then there was a SSTable+LSM renaissance, and just this week I heard about a fascinating paper on B+ + LSM beating SSTable+LSM. A user called Jules commented, wondered about B-epsilon trees instead of B+, and that got my brain going too. There are lots of things you can imagine an LSM tree using instead of SSTable at each level.

But how invested is MyRocks in SSTable? And will MyRocks ever close the performance gap between it and tokudb on the kind of workloads they are both good at?

Of course, what about Postgres? TimescaleDB is a really interesting fork based on Postgres that has a ‘hypertable’ approach under the hood, with a table made from a collection of smaller, individually compressed tables. In so many ways it sounds like tokudb, but with some extra finesse like storing the min/max values for columns in a segment uncompressed so the engine can check some constraints and often skip uncompressing a segment.

Timescaledb is interesting because its kind of merging the classic OLAP column-store with the classic OLTP row-store. I want to know if TimescaleDB’s hypertable compression works for things that aren’t time-series too? I’m thinking ‘if we claim our invoice line items are time-series data…’

Compression in Postgres is a sore subject, as is out-of-tree storage engines generally. Saying the file system should do compression means nobody has big data in Postgres because which stable file system supports decent compression? Postgres really needs to have built-in compression and really needs to go embrace the storage engines approach rather than keeping all the cool new stuff as second class citizens.

Of course, I fight the query planner all the time. If, for example, you have a table partitioned by day and your query is for a time span that spans two or more partitions, then you probably get much faster results if you split that into n queries, each for a corresponding partition, and glue the results together client-side! There was even a proxy called ShardQuery that did that. Its crazy. When people are making proxies in PHP to rewrite queries like that, it means the database itself is leaving a massive amount of performance on the table.

And of course, the client library you use to access the database can come in for a lot of blame too. For example, when I profile my queries where I have lots of parameters, I find that the mysql jdbc drivers are generating a metric ton of garbage in their safe-string-split approach to prepared-query interpolation. It shouldn’t be that my insert rate doubles when I do my hand-rolled string concatenation approach. Oracle, stop generating garbage!

This doesn’t begin to touch on the fancy cloud service you are using to host your DB. You’ll probably find that your laptop outperforms your average cloud DB server. Between all the spectre patches (I really don’t want you to forget about the syscall-batching possibilities!) and how you have to mess around buying disk space to get IOPs and all kinds of nonsense, its likely that you really would be better off perforamnce-wise by leaving your dev laptop in a cabinet somewhere.

Crikey, what a lot of complaining! But if you hear about some promising progress in speeding up databases, remember it's not realistic to hope the databases you use will ever see any kind of benefit from it. The sad truth is, your database is still stuck in the 90s. Async IO? Huh no. Compression? Yeah right. Syscalls? Okay, that’s a Linux failing, but still!

Right now my hopes are on TimescaleDB. I want to see how it copes with billions of rows of something that aren’t technically time-series. That hybrid row and column approach just sounds so enticing.

Oh, and hopefully MyRocks2 might find something even better than SSTable for each tier?

But in the meantime, hopefully someone working on the Linux kernel will rediscover the batched syscalls idea…? ;)

2 notes

·

View notes

Text

Project: Stealth pt.2 electric boogaloo

We’ve hidden from lsmod, but how do we hide our friendly device in /dev/? Lets find out what makes ls tick by running strace on it.

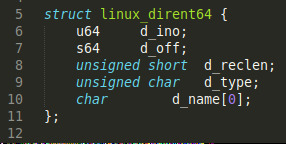

There are a lot of system calls involved, but the one that stands out is getdents64. The man page tells us these are not the interfaces we’re interested in, so we must be on the right track. It also tells us that this syscall reads linux_dirent structures from a directory. We find this struct in linux/dirent.h.

If we could intercept this syscall, we could modify the resulting dirent structures and remove any ones that mention us. It’s time to hijack some system calls.

https://ritcsec.wordpress.com/2017/05/17/modern-linux-rootkits/

This was a helpful resource I used during this part. We need to find the location of the syscall table in memory, but its symbol is not exported in my kernel version. However, the symbols for other syscalls such as sys_open are exported. This means we can simply bruteforce search through all of kernel memory to find its location (there are smarter ways of doing this, such as finding the System.map file which has its address). Below, PAGE_OFFSET gives the start of kernel memory and ULONG_MAX gives the end of it.

The syscall table is in read only memory, but we have convenient access to a control register, cr0, which the CPU uses to decide whether it can write to a specific memory address (specifically, it checks the 16th bit of this register). We have functions from arch/x86/include/asm/paravirt.h which allow us to modify this register, and therefore temporarily disable write protection and modify the syscall table with our own functions, ‘hooking’ into it..

So we write our own getdents function (getdents64 on my machine), replace the appropriate sycall table slot with our function pointer while saving the original. Then inside our new function, we call the original syscall function to perform the operation but then modify the resulting struct, removing any files that reveal us as well as adjusting the length reported (thanks to https://github.com/RITRedteam/goofkit for the inspiration). The results:

After installing the module, we see that any files mentioning us will not be listed, including in /dev/. However, the files are still there, nothing stops us from giving commands to the rootkit device. Also of note is that our module is still visible to procfs and sysfs, but we have already solved that problem.

1 note

·

View note

Text

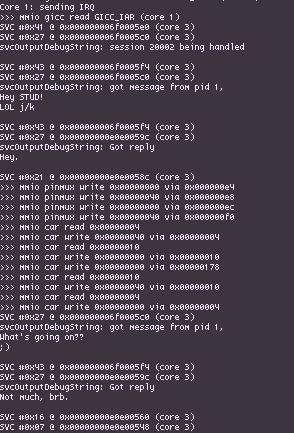

Nintendo Switch Kernel Patching and Emulation - Achieving Maximum Modification Flexibility with Minimal Replacement

Ever since shofEL2 was released earlier this year it's been interesting to watch how different custom firmwares have tackled the prospect of modifying Nintendo's firmware for both homebrew and piracy applications, and as someone who hasn't really had much stake in that race I feel like it's interesting to watch how different solutions tackle different problems, but at the same time since I do have a stake in a few places (namely, Smash Bros modding, vulnerability hunting, personal projects) I ended up in a situation where I needed to sort of 'set up camp' with Nintendo's Horizon microkernel and have a comfortable working environment for modification.

Binary Patching is Weird, and Horizon makes it weirder.

Probably the biggest difficulty in Switch development I would say is iteration time, followed by a general difficulty in actually modifying anything; even just booting modified core services (ie filesystem management, service management, spl which talks to the EL0 TrustZone SPL [commonly misnomered as the security processer liaison...?], the boot service which shows boot logos and battery indications, ...) requires, at a minimum, reimplementing Nintendo's package1 implementation which boots TrustZone and patches for TrustZone to disable signatures on those services and kernel. Beyond the core services, modifying executables loaded from eMMC requires either patching Loader, patching FS, reimplementing Loader, or something else.

Unfortunately with binary patching there generally isn't a silver bullet for things, generally speaking the three methods of modifications are userland replacement, userland patching, and kernel patching. The first two are currently used for Atmosphere, but the solution I felt would be the most robust and extensible for the Nintendo Switch was kernel patching. Here's a quick rundown on the pros and cons for each method:

Userland Replacement

- Requires rewriting an entire functionally identical executable - Often not feasible for larger services such as FS - Can easily break between firmware updates, especially if new functionality is added or services split. This makes it difficult to maintain when the OS is in active development. - Added processes can potentially leave detectable differences in execution (different PIDs, different order of init, etc) + Easier to add functionality, since you control all code + Can operate easily on multiple firmwares + Can serve as an open-source reference for closed-source code

Userland Patching

- Adding additional code and functionality can be difficult, since expanding/adding code pages isn't always feasible without good control of Loader - Finding good, searchable signatures can often be difficult - Can easily break between firmware updates, especially if functionality or compilers are tweaked + With good signatures, can withstand firmware updates which add functionality + Often has less maintenance between updates when functionality does change; patching is usually easier than writing new code + Harder to detect unless the application checks itself or others check patched applications

Kernel Patching

- Greater chance of literally anything going wrong (concurrency, cache issues, ...), harder to debug than userland - Minimal (formerly no) tooling for emulating the kernel, vs userland where Mephisto, yuzu, etc can offer assistance - Can easily break between firmware updates, and is more difficult (but not impossible) to develop a one-size-fits-all-versions patch since kernel objects change often - Easier to have adverse performance impacts with syscall hooks + Harder to detect modifications from userland; userland cannot read kernel and checking if kernel has tampered with execution state can be trickier + Updating kernel object structures can take less time than updating several rewritten services, since changes are generally only added/removed fields + Direct access to kernel objects makes more direct alterations easier (permission modification, handle injection, handle object swapping). + Direct access to hardware registers allows for UART printf regardless of initialization state and without IPC + Hooking for specific IPC commands avoids issues with userland functionality changes, and in most cases IPC commands moving to different IDs only disables functionality vs creating an unbootable system.

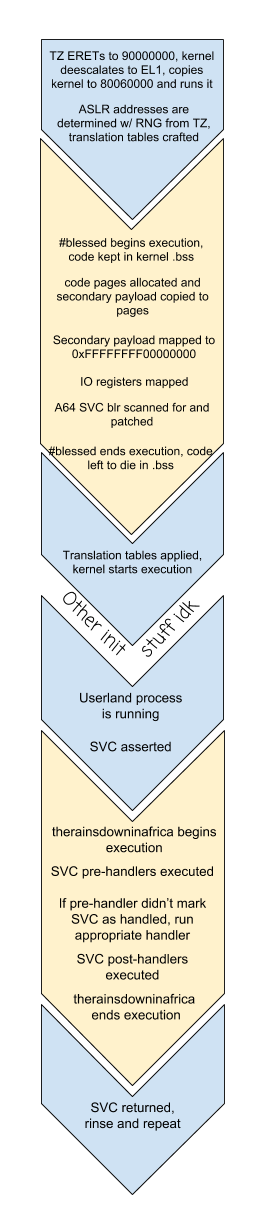

mooooooo, a barebones Tegra X1 emulator for Horizon

Obviously the largest hangup with kernel patching is debugging, the Switch has RAM to spare (unlike 3DS) and setting up an intercept for userland exceptions isn't impossible to do by trial and error using SMC panics/UART and a lot of patience, but for ease of use and future research I really, really wanted an emulator to experiment with the Switch kernel. I ended up building a quick-n-dirty emulator in Unicorn, and with a few processes it works rather well but it still struggles with loading Nintendo's core processes currently, but for a small and contained test environment (two processes talking to each other and kernel patches watching them), I would say I had reached my goal and it was enough to at least be able to work quickly and sanely on my intercept.

For the most part, the Switch Horizon microkernel doesn't actually use much of the Tegra MMIO; it uses some of the more obvious ARM components like GIC for interrupts, and it also has a large initialization sequence for the memory controller, but as long as interrupts are functional, timers work, MC returns some correct values and SMC return values are all correct, it boots into userland without issue.

I actually found that emulating multiple cores in Unicorn actually isn't all that difficult, provided you're using a compiled language where uc_mem_map_ptr works. Rather than messing with threads, I opted for a round-robin scheduling scheme where I run each Unicorn instance for a set number of instructions, with memory being mapped and unmapped from the running core so that any cached data gets written out before the next core has its turn. A lot of modifications/cherry-picking to Unicorn did have to be made in order to properly support interrupts, certain coprocessor registers (ie core indexes), translation tables (for uc_mem_read/uc_mem_write, vaddr->paddr translation, and just in general there were some odd issues).

Patching Horizon for Syscall MiTM

With a decent environment for modifying kernel, the next issue really just became actually bootstrapping an SVC intercept. Figuring out where exception vectors are located isn't difficult with the emulator handy, but really the issue becomes

1. Extra code has to be loaded and copied by TrustZone, along with kernel 2. New code needs to be placed in a safe location and then given memory mappings 3. Existing code needs to be modified with hooks pointing to the new code

To guide the kernel towards salvation I ended up hooking just before translation table addresses are written into coprocessor registers. This way, the payload can allocate pages and copy code from less-safe soon-to-be-condemned .bss memory for the bulk of the SVC interception code, set up those pages in the translation tables, and then patch the ARM64 SVC handler to actually jump to the new mapping. For ease of development, the mapping is given a constant address along with any hardware registers which it needs to access, as opposed to being randomized like the rest of the kernel.

In the end, patched the kernel executes as follows:

Since hashtagblessed is able to map UART, CAR and PINMUX registers into translation tables, getting communication from the right Joy-Con rail using existing BPMP-based drivers was fairly straightforward, and even without any source code to reference there's a fairly basic driver in TrustZone. Between the transition from emulation to hardware however, I had kept an SMC to print information to the console, but I ultimately ended up using UART even in emulation. On hardware, I got by using UART-B (the right Joy-Con railed) for a while, but had to switch to UART-A (internal UART lines for debugging) due to GPIO issues once HOS tried to initialize Joy-Con.

Identifying IPC Packets, Accurate Results With Simple Tools

With therainsdowninafrica loaded, hooked in and blessed, the next step is actually being able to identify specific IPC requests sent through svcSendSyncRequest, and doing this requires getting our hands dirty with kernel objects. Userland is able to utilize different kernel objects indirectly through the use of handles and system calls. Each KProcess has a handle table which maps handles to the underlying object structures, so translating handles to KObjects is simply a matter of checking the table for a given handle passed to a syscall. To access the current KProcess object which has the handle table, we can use the per-core context stored in register X18 as of 5.0.0 (prior to Horizon implementing ASLR, it was stored in a hardcoded address per-CPU) and the handle table can be accessed through the current KProcess object. Printf debugging was extremely useful while figuring out exactly how KProcess has its fields laid out since the structure changed slightly between versions, and with a bit of reversing added in it's not particularly difficult to figure out exactly where the KProcessHandleTable is at how handles are translated into objects.

Probably the most useful fields in KProcess/KThread, in our case, are the process name and title ID, the handle table, and the active thread's local storage, where all IPC packets are read from and written to. To give a quick overview on how Switch IPC generally works, services are able to register a named port to wait for communications on which can be connected to by other processes via svcConnectToNamedPort. In practice, Nintendo only uses globally-accessible named ports for their service manager, `sm:`. On a successful call to svcConnectToNamedPort, processes recieve a KClientSession handle for sm: which allows those processes to send IPC requests to the service manager to either register/unregister/connect to 'private' named ports managed by the service manager, with sm checking whether the requesting process actually has access to said service.

From a practicality standpoint, since so much communication relies on IPC the most obvious mechanism to establish is a system for hooking requests going into specific named ports, both globally accessible ones such as sm: and private ones provided by sm itself. This kinda leads into why it's important to have access to the underlying KClientSession objects as opposed to trying to track handles; mapping out exactly which KProcess' handles go to what, while also tracking where handles might be copied and moved to is an almost impossible task, however mapping specific KClientSessions to specific handlers avoids the handle copying/moving issue since the KClientSession object pointer does not change in those cases.

Additionally, many interfaces aren't actually accessible from either svcConnectToNamedPort nor sm, as is the case with fsp-srv which gives out IFileSystem handles for different storage mediums. However, by providing a generic means for mapping KClientSession objects to specific intercept handlers, you can set up a chain of handlers registering handlers. For example, intercepting a specific eMMC partition's IFile's commands would involve setting up a handler for the sm global port, and then from that handler setting up a handler for any returned fsp-srv handles, and then from the fsp-srv handler checking for the OpenBisFileSystem command for a specific partition to hook the IFileSystem to a handler, which can have its OpenFile command hooked to hook any outgoing IFile handles to a specific handler for IFiles from that eMMC partition. From that point all incoming and outgoing data from that IFile's KClientSession can be modified and tweaked.

Finally, in order to prevent issues with KProcess handle tables being exhausted, Nintendo provided virtual handle system implemented in userland for services which manage large amounts of sessions. Effectively, a central KClientSession is able to give out multiple virtual handles (with virtual handles given out by virtual interfaces) only accessible through that KClientSession handle. As such, a process can take a service such as fsp-srv and with a single handle can manage hundreds of virtual interfaces and sub-interfaces, easing handle table pressure on both the client and server ends. These handles can be accommodated for by watching for KClientSession->virtual handle conversion, and then keeping mappings for KClientSession-virtual ID pairs. And again, since copied/moved KClientSessions keep their same pointer, in the event that somehow the central handle and a bunch of domain IDs were copied to another process, they would still function correctly.

Tying it All Together

Let's take a look at what it would take to boot homebrew via hbloader utilizing only SVC interception. The key interface of interest is fsp-ldr, which offers command 0 OpenCodeFileSystem taking a TID argument and an NCA path. From a userland replacement standpoint, booting homebrew involves redirecting the returned IFileSystem to be one from the SD card rather than one from fsp-ldr, since Loader (the process accessing fsp-ldr) doesn't really do any authentication on NSOs/NPDMs, only FS does. From a kernel standpoint, we just need to watch for an IPC packet sent to fsp-ldr for that command, hook the resulting handle, and then for each OpenFile request check if an SD card file can better override it. From there, swap handles and now Loader is reading from an IFile on the SD card rather than an NCA.

Taking a few steps back, there's obviously a few things to keep in mind: Loader never actually accesses the SD card, in fact it doesn't even ask for a fsp-srv handle. Since it is a builtin service it has permissions for everything, but the issue still remains of actually making sure handles can be gotten and swapped in. As it turns out, however, calling SVC handlers from SVCs is more than possible, so if Loader sends a request to sm asking for fsp-ldr, we can send out a request for fsp-srv, initialize it, and then send out the original request without Loader even knowing.

Interestingly, the first thing Loader does with its fsp-ldr handle is it converts it into a virtual domain handle, so that all OpenCodeFileSystem handles are virtual handles. This does make working with it a little more tricky since the code filesystem and code files all operate under the same KClientSession object, but it was an issue which needed resolving anyhow. For SD card IFile sessions, it also means that we have to convert them to virtual handles and then swap both the file KClientSession handle and the file virtual handle ID, while also watching for their virtual ID to close so that we can close our handles at the same time and avoid leakage.

A few other tricks are required to properly emulate the SD redirection experience: swapping in handles isn't the only concern, it's also important to ensure that if the SD card *doesn't* have a file then that error should be returned instead, and if the SD card has a file which doesn't exist in the original IFileSystem, we still need a file handle to replace. To accomodate for this, the original FileOpen request is altered to always open "/main" and if the SD card errors, that virtual handle is closed, and otherwise the SD handles are swapped in.

The end result is, of course, the homebrew launcher being accessible off boot from the album applet:

youtube

Other Potential Thoughts for Kernel Stuff

* Process patching is as easy as hooking svcUnmapProcessMemory and patching the memory before it's unmapped from Loader. Same goes for NROs but with different SVCs, all .text ultimately passes through kernel. * Reply spoofing. IPC requests can simply return without ever calling the SVC, by having kernel write in a reply it wants the process to see. * SVC additions. I'm not personally a fan of this because it starts to introduce ABIs specific to the custom firmware, but it's possible. One of the things I'm not personally a fan of with Luma3DS was that they added a bunch of system calls in order to access things which, quite frankly, were better left managed in kernel. The kernel patches for fs_mitm also violate this. Userland processes shouldn't be messing with handle information and translation tables, i m o. That's hacky. * Virtual services and handles. Since the intercept is able to spoof anything and everything a userland process knows, it can provide fake handles which can map to a service which lies entirely in kernel space. * IPC augmentation: Since any IPC request can be hooked, it can be possible to insert requests inbetween expected ones. One interesting application might be watching for outgoing HID requests and then, on the fly, translating these requests to the protocol of another controller which also operates using HID. * IPC forwarding: similar to augmentation, packets can be forwarded to a userland process to be better handled. Unfortunately, kernel presents a lot of concurrency issues which can get really weird, especially since calling SVC handlers can schedule more threads that will run through the same code. * As currently implemented, A32 SVCs are not hooked, however this is really more an issue if you want to hook outgoing requests from A32 games like MK8, since services such as Loader will generally only operate in a 64-bit mode.

Source

Horizon emulator, https://github.com/shinyquagsire23/moooooooo

therainsdowninafrica, https://github.com/shinyquagsire23/therainsdowninafrica

2 notes

·

View notes

Text

Computer Operating Systems PS 1

Computer Operating Systems PS 1

ECE357:Computer Operating Systems PS 1/pg 1 Problem 1 — What is a system call and what is not? Consult the UNIX documentation, e.g. the man pages, to determine which of the following are actual system calls. For extra credit, if the function in question is just a library function, tell me if you think it generally results in the call of an underlying system call, and if so, which one name syscall…

View On WordPress

0 notes

Text

Redox 0x01

Introductory session

…and here we go! All excited. A first calendar entry to describe my attempt on ARM64 support in Redox OS. Specifically, looking into the Raspberry Pi2/3(B)/3+ (all of them having a Cortex-A53 ARMv8 64-bit microprocessor, although for all my experiments I am going to use the Raspberry Pi 3(B)).

Yesterday, I had my first meeting in Cambridge with @microcolonel! Very very inspiring, got many ideas and motivation. He reminded me that the first and most important thing I fell in love with Open Source is its people :)

Discussion Points

Everything started with a personal introduction, background and motivation reasons that we both participate in this project. It’s very important to note that we don’t want it to be a one-off thing but definitely the start of a longer support and experimentation with OS support and ARM.

Redox boot flow on AArch64

Some of the points discussed:

* boot * debug * MMU setup * TLS * syscalls `</pre> The current work by @microcolonel, is happening on the realms of `qemu-system-aarch64` platform. But what should I need to put my attention, when porting to the Rpi3? Here are some importants bits: <pre>`[ ] Typical AArch64 exception level transitions post reset: EL3 -> EL2 -> EL1 [x] Setting up a buildable u-boot (preferably the u-boot mainline) for RPi3 [ ] Setting up a BOOTP/TFTP server on the same subnet as the RPi3 [ ] Packaging the redox kernel binary as a (fake) Linux binary using u-boot's mkimage tool [ ] Obtaining an FDT blob for the RPi3 (Linux's DTB can be used for this). In hindsight, u-boot might be able to provide this too (u-boot's own generated ) [ ] Serving the packaged redox kernel binary as well as the FDT blob to u-boot via BOOT/TFTP [x] Statically expressing a suitable PL011 UART's physical base address within Redox as an initial debug console `</pre> Note: I’ve already completed (as shown) two important steps, which I am going to describe on my next blog post (to keep you excited ;-) **Challenges with recursive paging for AArch64** @microcolonel is very fond of recursive paging. He seems to succesfully to make it work on qemu and it seems that it may be possible in sillicon as well. This is for 48-bit Virtual Addresses with 4 levels of translation. As AArch64 has separate descriptors for page tables and pages which means that in order for recursive paging to work there must not be any disjoint bitfields in the two descriptor types. This is the case today but it is not clear if this will remain in the future. The problem is that if recursive paging doesn’t work on the physical implementation that may time much longer than expected to port for the RPi3. Another point, is that as opposed to x86_64, AArch6 has a separate translation scheme for user-space and kernel space. So while x86_64 has a single cr3 register containing the base address of the trnslation tables, AArch64 has two registers, ttbr_el0 for user-space and ttbr_el1 for the kernel. In this realm, there has been @microcolonel’s work to extend the paging schemes in Redox to cope with this. **TLS, Syscalls and Device Drivers** The Redox kernel’s reliance on Rust’s #[thread_local] attribute results in llvm generating references to the tpidr_el0 register. On AArch64 tpidr_el0 is supposed to contain the user-space TLS region’s base address. This is separate from tpidr_el1 which is supposted to contain the kernel-space TLS region’s base address. To fix this, @microcolonel has modified llvm such that the use of a ‘kernel’ code-model and an aarch64-unknown-redox target results in the emission og tpidr_el1. TLS support is underway at present. **Device drivers and FDT** For the device driver operation using fdt it’s very important to note the following: <pre>`* It will be important to create a registry of all the device drivers present * All device drivers will need to implement a trait that requires publishing of a device-tree compatible string property * As such, init code can then match the compatible string with the tree of nodes in the device tree in order to match drivers to their respective data elements in the tree `</pre> ** ** **Availability of @microcolonel’s code base** As he still expects his employer’s open source contribution approval there are still many steps to be done to port Redox OS. The structure of the code to be published was also discussed. At present @microcolonel’s work is a set of patches to the following repositories: <pre>`* Top level redox checkout (build glue etc) * Redox kernel submodule (core AArch64 support) * Redox syscall's submodule (AArch64 syscall support) * Redox's rust submodule (TLS support, redox toolchain triplet support) `</pre> Possible ways to manage the publishing of this code were also discussed. One way is to create AArch64 branches for all of the above and push them out to the redox github. This is TBD with **@jackpot51**. **Feature parity with x86_64** It’s very important to stay aligned with the current x86_64 port and for that reason the following work is important to be underways: <pre>`* Syscall implementation * Context switch support * kmain -> init invocation * Filesystem with apps * Framebuffer driver * Multi-core support * (...) (to be filled with a whole list of the current x86_64 features) `</pre> _Attaining feature parity would be the first concrete milestone for the AArch64 port as a whole._ **My next steps** As a result of the discussion and mentoring, the following steps were decided for the future: <pre>`[x] Get to a point where u-boot can be built from source and installed on the RPi3 [x] Figure out the UART base and verify that the UART's data register can be written to from the u-boot CLI (which should provoke an immediate appearance of characters on the CLI) [ ] Setup a flow using BOOTP/DHCP and u-boot that allows Redox kernels and DTBs to be sent to u-boot over ethernet [ ] Once microcolonel's code has been published, start by hacking in the UART base address and a DTB blob [ ] Aim to reach kstart with println output up and running. `</pre> **Next steps for @microcolonel** <pre>`[ ] Complete TLS support [ ] Get Board and CPU identification + display going via DTB probes [ ] Verify kstart entry on silicon. microcolonel means to use the Lemaker Hikey620 Linaro 96Board for this. It's a Cortex-A53 based board just like the RPi3. The idea is to quickly check if recursive paging on silicon is OK. This can make wizofe's like a lot rosier. :) [ ] Make the UART base address retrieval dynamic via DTB (as opposed to the static fixed address used at present which isn't portable) [ ] Get init invocation from kmain going [ ] Implement necessary device driver identification traits and registry [ ] Implement GIC and timer drivers (Red Flag for RPi3 here, as it has no implementation of GIC but rather a closed propietary approach) [ ] Focus on user-land bring-up `</pre> **Future work** If we could pick up the most important plan for the future of Redox that would be a roadmap! Some of the critical items that should be discussed: <pre>`* Suitable tests and Continuous integration (perhaps with Jenkins) * A pathway to run Linux applications under Redox. FreeBSD's linuxulator (system call translator) would be one way to do this. This would make complex applications such as firefox etc usable until native solutions become available in the longer term. * Self hosted development. Having redox bootable on a couple of popular laptops with a focus on featurefulness will go a great way in terms of perception. System76 dual boot with Pop_OS! ? ;) * A strategy to support hardware assisted virtualization.

Thanks

Thanks for reading! Hope to see you next time here. For any questions feel free to email me: code -@- wizofe dot uk. Many many insights are taken from @microcolonel’s very detailed summary; The following part of the blog is my own experimentation and exploration on the discussed matters!

2 notes

·

View notes

Text

syscall Paging system Industries

1 note

·

View note

Text

Updated MySQL OSMetrics Plugins

It has been some time since I have posted updates to my plugins. After the initial version, I decided to split the plugins into categories of metrics. This will allow users to choose whether they want to install all of the plugins or only select ones they care about. Since the installation process is unfamiliar to many users, I also expanded the instructions to make it a little easier to follow. Moreover, I added a Makefile. I have also reformatted the output of some plugins to be either horizontal or vertical in orientation. There is still more work to do in this area as well. Where to Get The MySQL Plugins You can get the plugins from GitHub at https://github.com/toritejutsu/osmetrics but they will have to be compiled from source. As mentioned above, you can choose whether to install all of them or one by one. If you have an interest, feel free to contribute to the code as it would make them much more useful to get more input and actual usage. What Are The Plugins? These are a collection of MySQL plugins for displaying Operating System metrics in INFORMATION_SCHEMA. This would allow monitoring tools, such as Percona Monitoring and Management (PMM), to retrieve these values remotely via the MySQL interface. Values are pulled via standard C library calls and some are read from the /proc filesystem so overhead is absolutely minimal. I added a couple of libraries originally to show that even Windows and other variants of UNIX can be utilized, but commented them out to keep it all simple for now. Many variables were added to show what was possible. Some of these may not be of interest. I just wanted to see what kind of stuff was possible and would tweak these over time. Also, none of the calculations were rounded. This was done just to keep precision for the graphing of values but could easily be changed later. If there is interest, this could be expanded to add more metrics and unnecessary ones removed. Just looking for feedback. Keep in mind that my C programming skills are rusty and I am sure the code could be cleaned up. Make sure you have the source code for MySQL and have done a cmake on it. This will be necessary to compile the plugin as well as some SQL install scripts. Preparing The Environment Below is the way that I compiled the plugin. You will obviously need to make changes to match your environment. You will also need to have the Percona Server for MySQL source code on your server: wget https://www.percona.com/downloads/Percona-Server-5.7/Percona-Server-5.7.17-13/source/tarball/percona-server-5.7.17-13.tar.gz Uncompress the file and go into the directory: tar -zxvf percona-server-5.7.17-13.tar.gz cd percona-server-5.7.17-13 I also had to add a few utilities: sudo yum install cmake sudo yum install boost sudo yum install ncurses-devel sudo yum install readline-devel cmake -DDOWNLOAD_BOOST=1 -DWITH_BOOST=.. Compiling The Plugins First, you will need to put the plugin code in the plugin directory of the source code you downloaded. For me, this was “/home/ec2-user/percona-server-5.7.17-13/plugin” and I named the directory “osmetrics”. Of course, you can just do a “git” to retrieve this to your server or download it as a zip file and decompress it. Just make sure it is placed into the “plugin” directory of the source code as noted above. Next, you will need to know where your MySQL plugin directory is located. You can query that with the following SQL: mysql> SHOW GLOBAL VARIABLES LIKE "%plugin_dir%"; +---------------+-------------------------+ | Variable_name | Value | +---------------+-------------------------+ | plugin_dir | /jet/var/mysqld/plugin/ | +---------------+-------------------------+ 1 row in set (0.01 sec) You will then need to edit the Makefile and define this path there. Once that is complete, you can compile the plugins: make clean make make install Installing The Plugins Finally, you can log in to MySQL and activate the plugins: mysql> INSTALL PLUGIN OS_CPU SONAME 'osmetrics-cpu.so'; mysql> INSTALL PLUGIN OS_CPUGOVERNOR SONAME 'osmetrics-cpugovernor.so'; mysql> INSTALL PLUGIN OS_CPUINFO SONAME 'osmetrics-cpuinfo.so'; mysql> INSTALL PLUGIN OS_IOSCHEDULER SONAME 'osmetrics-ioscheduler.so'; mysql> INSTALL PLUGIN OS_DISKSTATS SONAME 'osmetrics-diskstats.so'; mysql> INSTALL PLUGIN OS_LOADAVG SONAME 'osmetrics-loadavg.so'; mysql> INSTALL PLUGIN OS_MEMINFO SONAME 'osmetrics-meminfo.so'; mysql> INSTALL PLUGIN OS_MEMORY SONAME 'osmetrics-memory.so'; mysql> INSTALL PLUGIN OS_MISC SONAME 'osmetrics-misc.so'; mysql> INSTALL PLUGIN OS_MOUNTS SONAME 'osmetrics-mounts.so'; mysql> INSTALL PLUGIN OS_NETWORK SONAME 'osmetrics-network.so'; mysql> INSTALL PLUGIN OS_STAT SONAME 'osmetrics-stat.so'; mysql> INSTALL PLUGIN OS_SWAPINFO SONAME 'osmetrics-swapinfo.so'; mysql> INSTALL PLUGIN OS_VERSION SONAME 'osmetrics-version.so'; mysql> INSTALL PLUGIN OS_VMSTAT SONAME 'osmetrics-vmstat.so'; Alternatively, you can run the install SQL script: mysql> SOURCE /path/to/install_plugins.sql Verify Installation If all went well, you should see several new plugins available. Just make sure the status is “ACTIVE.” mysql> SHOW PLUGINS; +-----------------------------+----------+--------------------+----------------------------+---------+ | Name | Status | Type | Library | License | +-----------------------------+----------+--------------------+----------------------------+---------+ ... | OS_CPU | ACTIVE | INFORMATION SCHEMA | osmetrics-cpu.so | GPL | | OS_GOVERNOR | ACTIVE | INFORMATION SCHEMA | osmetrics-cpugovernor.so | GPL | | OS_CPUINFO | ACTIVE | INFORMATION SCHEMA | osmetrics-cpuinfo.so | GPL | | OS_DISKSTATS | ACTIVE | INFORMATION SCHEMA | osmetrics-diskstats.so | GPL | | OS_IOSCHEDULER | ACTIVE | INFORMATION SCHEMA | osmetrics-diskscheduler.so | GPL | | OS_LOADAVG | ACTIVE | INFORMATION SCHEMA | osmetrics-loadavg.so | GPL | | OS_MEMINFO | ACTIVE | INFORMATION SCHEMA | osmetrics-meminfo.so | GPL | | OS_MEMORY | ACTIVE | INFORMATION SCHEMA | osmetrics-memory.so | GPL | | OS_MISC | ACTIVE | INFORMATION SCHEMA | osmetrics-misc.so | GPL | | OS_MOUNTS | ACTIVE | INFORMATION SCHEMA | osmetrics-mounts.so | GPL | | OS_NETWORK | ACTIVE | INFORMATION SCHEMA | osmetrics-network.so | GPL | | OS_STAT | ACTIVE | INFORMATION SCHEMA | osmetrics-stat.so | GPL | | OS_SWAPINFO | ACTIVE | INFORMATION SCHEMA | osmetrics-swapinfo.so | GPL | | OS_VERSION | ACTIVE | INFORMATION SCHEMA | osmetrics-version.so | GPL | | OS_VMSTAT | ACTIVE | INFORMATION SCHEMA | osmetrics-vmstat.so | GPL | +-----------------------------+----------+--------------------+----------------------------+---------+ Querying The Plugins Let’s look at some example output from each of the plugins below: mysql> SELECT * FROM INFORMATION_SCHEMA.OS_CPU; +---------------+------------+--------------------------------------------------------------------+ | name | value | comment | +---------------+------------+--------------------------------------------------------------------+ | numcores | 1 | Number of virtual CPU cores | | speed | 2299.892 | CPU speed in MHz | | bogomips | 4600.08 | CPU bogomips | | user | 0 | Normal processes executing in user mode | | nice | 4213 | Niced processes executing in user mode | | sys | 610627 | Processes executing in kernel mode | | idle | 524 | Processes which are idle | | iowait | 0 | Processes waiting for I/O to complete | | irq | 9 | Processes servicing interrupts | | softirq | 765 | Processes servicing Softirqs | | guest | 0 | Processes running a guest | | guest_nice | 0 | Processes running a niced guest | | intr | 200642 | Count of interrupts serviced since boot time | | ctxt | 434493 | Total number of context switches across all CPUs | | btime | 1595891204 | Ttime at which the system booted, in seconds since the Unix epoch | | processes | 9270 | Number of processes and threads created | | procs_running | 3 | Total number of threads that are running or ready to run | | procs_blocked | 0 | Number of processes currently blocked, waiting for I/O to complete | | softirq | 765 | Counts of softirqs serviced since boot time | | idle_pct | 0.09 | Average CPU idle time | | util_pct | 99.91 | Average CPU utilization | | procs | 120 | Number of current processes | | uptime_tv_sec | 1 | User CPU time used (in seconds) | | utime_tv_usec | 943740 | User CPU time used (in microseconds) | | stime_tv_sec | 1 | System CPU time (in seconds) | | stime_tv_usec | 315574 | System CPU time (in microseconds) | | utime | 1.94374 | Total user time | | stime | 1.315574 | Total system time | | minflt | 34783 | Page reclaims (soft page faults) | | majflt | 0 | Page faults | | nvcsw | 503 | Number of voluntary context switches | | nivcsw | 135 | Number of involuntary context switches | +---------------+------------+--------------------------------------------------------------------+ 32 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_CPUGOVERNOR; +--------+-------------+ | name | governor | +--------+-------------+ | cpu0 | performance | +--------+-------------+ 1 row in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_CPUINFO; +-----------+--------------+------------+-------+-------------------------------------------+----------+-----------+----------+------------+-------------+----------+---------+-----------+--------+----------------+-----+---------------+-------------+-----+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------+----------+--------------+-----------------+-----------------------------------+------------------+ | processor | vendor_id | cpu_family | model | model_name | stepping | microcode | cpu_MHz | cache_size | physical_id | siblings | core_id | cpu_cores | apicid | initial_apicid | fpu | fpu_exception | cpuid_level | wp | flags | bugs | bogomips | clflush_size | cache_alignment | address_sizes | power_management | +-----------+--------------+------------+-------+-------------------------------------------+----------+-----------+----------+------------+-------------+----------+---------+-----------+--------+----------------+-----+---------------+-------------+-----+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------+----------+--------------+-----------------+-----------------------------------+------------------+ | 0 | GenuineIntel | 6 | 63 | Intel(R) Xeon(R) CPU E5-2676 v3 @ 2.40GHz | 2 | 0x43 | 2400.005 | 30720 KB | 0 | 1 | 0 | 1 | 0 | 0 | yes | yes | 13 | yes | fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx rdtscp lm constant_tsc rep_good nopl xtopology cpuid pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave a | cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf mds swapgs itlb_multihit | 4800.11 | 64 | 64 | 46 bits physical, 48 bits virtual | | +-----------+--------------+------------+-------+-------------------------------------------+----------+-----------+----------+------------+-------------+----------+---------+-----------+--------+----------------+-----+---------------+-------------+-----+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------+----------+--------------+-----------------+-----------------------------------+------------------+ 1 row in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_IOSCHEDULER; +--------+-----------+ | device | scheduler | +--------+-----------+ | xvda | [noop] | +--------+-----------+ 1 row in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_DISKSTATS; +-----------+-----------+--------+---------------+--------------+--------------+---------------+----------------+---------------+-----------------+----------------+-----------------+------------------+---------------------------+ | major_num | minor_num | device | reads_success | reads_merged | sectors_read | time_reads_ms | writes_success | writes_merged | sectors_written | time_writes_ms | ios_in_progress | time_doing_io_ms | weighted_time_doing_io_ms | +-----------+-----------+--------+---------------+--------------+--------------+---------------+----------------+---------------+-----------------+----------------+-----------------+------------------+---------------------------+ | 202 | 0 | xvda | 10286 | 10 | 472913 | 7312 | 4137 | 2472 | 351864 | 14276 | 0 | 4452 | 21580 | | 202 | 1 | xvda1 | 10209 | 10 | 468929 | 7280 | 4137 | 2472 | 351864 | 14276 | 0 | 4436 | 21548 | | 202 | 2 | xvda2 | 40 | 0 | 3504 | 24 | 0 | 0 | 0 | 0 | 0 | 24 | 24 | +-----------+-----------+--------+---------------+--------------+--------------+---------------+----------------+---------------+-----------------+----------------+-----------------+------------------+---------------------------+ 3 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_LOADAVG; +--------+-------+------------------------+ | name | value | comment | +--------+-------+------------------------+ | 1_min | 0.09 | 1 minute load average | | 5_min | 0.02 | 5 minute load average | | 15_min | 0.01 | 15 minute load average | +--------+-------+------------------------+ 3 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_MEMINFO; +-----------------+------------+ | name | value | +-----------------+------------+ | MemTotal | 2090319872 | | MemFree | 1658920960 | | MemAvailable | 1762938880 | | Buffers | 22573056 | | Cached | 206209024 | | SwapCached | 0 | | Active | 284999680 | | Inactive | 100868096 | | Active(anon) | 156110848 | | Inactive(anon) | 53248 | | Active(file) | 128888832 | | Inactive(file) | 100814848 | | Unevictable | 0 | | Mlocked | 0 | | SwapTotal | 0 | | SwapFree | 0 | | Dirty | 811008 | | Writeback | 0 | | AnonPages | 157085696 | | Mapped | 53223424 | | Shmem | 65536 | | Slab | 29102080 | | SReclaimable | 18337792 | | SUnreclaim | 10764288 | | KernelStack | 2162688 | | PageTables | 3444736 | | NFS_Unstable | 0 | | Bounce | 0 | | WritebackTmp | 0 | | CommitLimit | 1045159936 | | Committed_AS | 770662400 | | VmallocTotal | 4294966272 | | VmallocUsed | 0 | | VmallocChunk | 0 | | AnonHugePages | 0 | | ShmemHugePages | 0 | | ShmemPmdMapped | 0 | | HugePages_Total | 0 | | HugePages_Free | 0 | | HugePages_Rsvd | 0 | | HugePages_Surp | 0 | | Hugepagesize | 2097152 | | DirectMap4k | 60817408 | | DirectMap2M | 2086666240 | +-----------------+------------+ 44 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_MEMORY; +----------------+-----------------------+--------------------------------------+ | name | value | comment | +----------------+-----------------------+--------------------------------------+ | total_ram | 2090319872 | Total usable main memory size | | free_ram | 1452339200 | Available memory size | | used_ram | 637980672 | Used memory size | | free_ram_pct | 69.48 | Available memory as a percentage | | used_ram_pct | 30.52 | Free memory as a percentage | | shared_ram | 61440 | Amount of shared memory | | buffer_ram | 108040192 | Memory used by buffers | | total_high_ram | 0 | Total high memory size | | free_high_ram | 0 | Available high memory size | | total_low_ram | 2090319872 | Total low memory size | | free_low_ram | 1452339200 | Available low memory size | | maxrss | 140308942222128 | Maximum resident set size | +----------------+-----------------------+--------------------------------------+ 12 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_MISC; +-----------------------+-------------------+-------------------------------------------------+ | name | value | comment | +-----------------------+-------------------+-------------------------------------------------+ | datadir_size | 8318783488 | MySQL data directory size | | datadir_size_free | 2277470208 | MySQL data directory size free space | | datadir_size_used | 6041313280 | MySQL data directory size used space | | datadir_size_used_pct | 72.62 | MySQL data directory used space as a percentage | | uptime | 100026 | Uptime (in seconds) | | uptime_days | 1 | Uptime (in days) | | uptime_hours | 27 | Uptime (in hours) | | procs | 122 | Number of current processes | | swappiness | 60 | Swappiness setting | +-----------------------+-------------------+-------------------------------------------------+ 9 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_MOUNTS; +------------+--------------------------+------------------+---------------------------+ | device | mount_point | file_system_type | mount_options | +------------+--------------------------+------------------+---------------------------+ | proc | /proc | proc | rw,relatime | | sysfs | /sys | sysfs | rw,relatime | | devtmpfs | /dev | devtmpfs | rw,relatime,size=1010060k | | devpts | /dev/pts | devpts | rw,relatime,gid=5,mode=62 | | tmpfs | /dev/shm | tmpfs | rw,relatime | | /dev/xvda1 | / | ext4 | rw,noatime,data=ordered | | devpts | /dev/pts | devpts | rw,relatime,gid=5,mode=62 | | none | /proc/sys/fs/binfmt_misc | binfmt_misc | rw,relatime | +------------+--------------------------+------------------+---------------------------+ 8 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_NETWORK; +-----------+------------+------------+----------+----------+------------+------------+-----------+-----------+ | interface | tx_packets | rx_packets | tx_bytes | rx_bytes | tx_dropped | rx_dropped | tx_errors | rx_errors | +-----------+------------+------------+----------+----------+------------+------------+-----------+-----------+ | lo | 26528 | 26528 | 1380012 | 1380012 | 0 | 0 | 0 | 0 | | eth0 | 102533 | 144031 | 16962983 | 23600676 | 0 | 0 | 0 | 0 | +-----------+------------+------------+----------+----------+------------+------------+-----------+-----------+ 2 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_STAT; +------+----------+-------+------+------+---------+--------+-------+---------+--------+---------+--------+---------+-------+-------+--------+--------+----------+------+-------------+-------------+-----------+------------+-------+-----------------------+-----------+----------+-----------------+---------+---------+--------+---------+-----------+----------+-------+-------+--------+-------------+-----------+-------------+--------+-----------------------+------------+-------------+------------+----------+-----------+-----------------+-----------------+-----------------+-----------------+-----------+ | pid | comm | state | ppid | pgrp | session | tty_nr | tpgid | flags | minflt | cminflt | majflt | cmajflt | utime | stime | cutime | cstime | priority | nice | num_threads | itrealvalue | starttime | vsize | rss | rsslim | startcode | endcode | startstack | kstkeep | kstkeip | signal | blocked | sigignore | sigcatch | wchan | nswap | cnswap | exit_signal | processor | rt_priority | policy | delayacct_blkio_ticks | guest_time | cguest_time | start_data | end_data | start_brk | arg_start | arg_end | env_start | env_end | exit_code | +------+----------+-------+------+------+---------+--------+-------+---------+--------+---------+--------+---------+-------+-------+--------+--------+----------+------+-------------+-------------+-----------+------------+-------+-----------------------+-----------+----------+-----------------+---------+---------+--------+---------+-----------+----------+-------+-------+--------+-------------+-----------+-------------+--------+-----------------------+------------+-------------+------------+----------+-----------+-----------------+-----------------+-----------------+-----------------+-----------+ | 6656 | (mysqld) | S | 2030 | 1896 | 1896 | 0 | -1 | 4194304 | 34784 | 0 | 0 | 0 | 96 | 55 | 0 | 0 | 20 | 0 | 29 | 0 | 965078 | 1153900544 | 37324 | 1.8446744073709552e19 | 4194304 | 27414570 | 140728454321408 | 0 | 0 | 0 | 540679 | 12294 | 1768 | 0 | 0 | 0 | 17 | 0 | 0 | 0 | 4 | 0 | 0 | 29511728 | 31209920 | 36462592 | 140728454327797 | 140728454328040 | 140728454328040 | 140728454328281 | 0 | +------+----------+-------+------+------+---------+--------+-------+---------+--------+---------+--------+---------+-------+-------+--------+--------+----------+------+-------------+-------------+-----------+------------+-------+-----------------------+-----------+----------+-----------------+---------+---------+--------+---------+-----------+----------+-------+-------+--------+-------------+-----------+-------------+--------+-----------------------+------------+-------------+------------+----------+-----------+-----------------+-----------------+-----------------+-----------------+-----------+ 1 row in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_SWAPINFO; +---------------+-------+--------------------------------------+ | name | value | comment | +---------------+-------+--------------------------------------+ | total_swap | 0 | Total swap space size | | free_swap | 0 | Swap space available | | used_swap | 0 | Swap space used | | free_swap_pct | 0 | Swap space available as a percentage | | used_swap_pct | 0 | Swap space used as a percentage | +---------------+-------+--------------------------------------+ 5 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_VMSTAT; +---------------------------+----------+ | name | value | +---------------------------+----------+ | nr_free_pages | 354544 | | nr_zone_inactive_anon | 13 | | nr_zone_active_anon | 38257 | | nr_zone_inactive_file | 30742 | | nr_zone_active_file | 70367 | | nr_zone_unevictable | 0 | | nr_zone_write_pending | 9 | | nr_mlock | 0 | | nr_page_table_pages | 854 | | nr_kernel_stack | 2176 | | nr_bounce | 0 | | nr_zspages | 0 | | nr_free_cma | 0 | | numa_hit | 14011351 | | numa_miss | 0 | | numa_foreign | 0 | | numa_interleave | 15133 | | numa_local | 14011351 | | numa_other | 0 | | nr_inactive_anon | 13 | | nr_active_anon | 38257 | | nr_inactive_file | 30742 | | nr_active_file | 70367 | | nr_unevictable | 0 | | nr_slab_reclaimable | 9379 | | nr_slab_unreclaimable | 2829 | | nr_isolated_anon | 0 | | nr_isolated_file | 0 | | workingset_refault | 0 | | workingset_activate | 0 | | workingset_nodereclaim | 0 | | nr_anon_pages | 38504 | | nr_mapped | 13172 | | nr_file_pages | 100873 | | nr_dirty | 9 | | nr_writeback | 0 | | nr_writeback_temp | 0 | | nr_shmem | 15 | | nr_shmem_hugepages | 0 | | nr_shmem_pmdmapped | 0 | | nr_anon_transparent_hugep | 0 | | nr_unstable | 0 | | nr_vmscan_write | 0 | | nr_vmscan_immediate_recla | 0 | | nr_dirtied | 389218 | | nr_written | 381326 | | nr_dirty_threshold | 87339 | | nr_dirty_background_thres | 43616 | | pgpgin | 619972 | | pgpgout | 2180908 | | pswpin | 0 | | pswpout | 0 | | pgalloc_dma | 0 | | pgalloc_dma32 | 14085334 | | pgalloc_normal | 0 | | pgalloc_movable | 0 | | allocstall_dma | 0 | | allocstall_dma32 | 0 | | allocstall_normal | 0 | | allocstall_movable | 0 | | pgskip_dma | 0 | | pgskip_dma32 | 0 | | pgskip_normal | 0 | | pgskip_movable | 0 | | pgfree | 14440053 | | pgactivate | 55703 | | pgdeactivate | 1 | | pglazyfree | 249 | | pgfault | 14687206 | | pgmajfault | 1264 | | pglazyfreed | 0 | | pgrefill | 0 | | pgsteal_kswapd | 0 | | pgsteal_direct | 0 | | pgscan_kswapd | 0 | | pgscan_direct | 0 | | pgscan_direct_throttle | 0 | | zone_reclaim_failed | 0 | | pginodesteal | 0 | | slabs_scanned | 0 | | kswapd_inodesteal | 0 | | kswapd_low_wmark_hit_quic | 0 | | kswapd_high_wmark_hit_qui | 0 | | pageoutrun | 0 | | pgrotated | 44 | | drop_pagecache | 0 | | drop_slab | 0 | | oom_kill | 0 | | numa_pte_updates | 0 | | numa_huge_pte_updates | 0 | | numa_hint_faults | 0 | | numa_hint_faults_local | 0 | | numa_pages_migrated | 0 | | pgmigrate_success | 0 | | pgmigrate_fail | 0 | | compact_migrate_scanned | 0 | | compact_free_scanned | 0 | | compact_isolated | 0 | | compact_stall | 0 | | compact_fail | 0 | | compact_success | 0 | | compact_daemon_wake | 0 | | compact_daemon_migrate_sc | 0 | | compact_daemon_free_scann | 0 | | htlb_buddy_alloc_success | 0 | | htlb_buddy_alloc_fail | 0 | | unevictable_pgs_culled | 1300 | | unevictable_pgs_scanned | 0 | | unevictable_pgs_rescued | 266 | | unevictable_pgs_mlocked | 2626 | | unevictable_pgs_munlocked | 2626 | | unevictable_pgs_cleared | 0 | | unevictable_pgs_stranded | 0 | | thp_fault_alloc | 0 | | thp_fault_fallback | 0 | | thp_collapse_alloc | 0 | | thp_collapse_alloc_failed | 0 | | thp_file_alloc | 0 | | thp_file_mapped | 0 | | thp_split_page | 0 | | thp_split_page_failed | 0 | | thp_deferred_split_page | 0 | | thp_split_pmd | 0 | | thp_split_pud | 0 | | thp_zero_page_alloc | 0 | | thp_zero_page_alloc_faile | 0 | | thp_swpout | 0 | | thp_swpout_fallback | 0 | | swap_ra | 0 | | swap_ra_hit | 0 | +---------------------------+----------+ 130 rows in set (0.00 sec) mysql> SELECT * FROM INFORMATION_SCHEMA.OS_VERSION; +------------+---------------------------+ | name | value | +------------+---------------------------+ | sysname | Linux | | nodename | ip-172-31-25-133 | | release | 4.14.181-108.257.amzn1.x8 | | version | #1 SMP Wed May 27 02:43:0 | | machine | x86_64 | | domainname | (none) | +------------+---------------------------+ 6 rows in set (0.00 sec) Uninstalling The Plugins To uninstall the plugins, you can remove them with the following SQL commands. To completely remove them, you will need to remove them from your plugin directory. mysql> UNINSTALL PLUGIN OS_CPU; mysql> UNINSTALL PLUGIN OS_CPUGOVERNOR; mysql> UNINSTALL PLUGIN OS_CPUINFO; mysql> UNINSTALL PLUGIN OS_IOSCHEDULER; mysql> UNINSTALL PLUGIN OS_DISKSTATS; mysql> UNINSTALL PLUGIN OS_LOADAVG; mysql> UNINSTALL PLUGIN OS_MEMINFO; mysql> UNINSTALL PLUGIN OS_MEMORY; mysql> UNINSTALL PLUGIN OS_MISC; mysql> UNINSTALL PLUGIN OS_MOUNTS; mysql> UNINSTALL PLUGIN OS_NETWORK; mysql> UNINSTALL PLUGIN OS_STAT; mysql> UNINSTALL PLUGIN OS_SWAPINFO; mysql> UNINSTALL PLUGIN OS_VERSION; mysql> UNINSTALL PLUGIN OS_VMSTAT; Alternatively, you can run the uninstall SQL script: mysql> SOURCE /path/to/uninstall_plugins.sql What’s Next? Who knows! If there is enough interest, I would be happy to expand the plugins. First, I need to do some more code cleanup and performance test them. I do not expect them to have a significant performance impact, but one never knows until you test… https://www.percona.com/blog/2021/01/08/updated-mysql-osmetrics-plugins/

0 notes

Text

SA Wk5: Hiding

From the terminal, ls works through the getdirentries syscall. Hence, hooking this syscall will allow us to hide our malicious files from the user. Its implementation is found in /sys/kern/vfs_syscalls.c .

If sys_getdirentries is successful, it returns the number of bytes successfully read from the directory entries into td->td_retval. The directory entries are stored in the args struct in char *buf as dirent structs. Lucky for me, in COMP1521 I had just completed a lab exercise on file systems so I wouldn’t need to learn about dirent structs from scratch.

To hook the module, I will need to traverse buf after calling sys_getdirentries and remove the entry corresponding to the file I am trying to hide. For simplicity I will just hardcode the name of this file in.